Building a Transparent Proxy in AWS VPC with Terraform and Squid


In this article I set up an example network and deploy a transparent proxy to it.

To make this repeatable and to show exactly how it can be deployed in AWS VPC, I am using Terraform.

Terraform is an excellent tool for describing and automating cloud infrastructure.

All of the Terraform code for this project is available at https://github.com/nearform/aws-proxy-pattern. I have chosen AWS for this article and the associated Terraform code, but the concept is very general, and equivalent Terraform code could be written for any supported IaaS platform (e.g. Google Cloud, Azure).

A Quick Overview

Let me start by defining some terms and explaining what a proxy is good for.

A transparent proxy is one where there is no configuration required on the applications using the proxy. The hosts may have some routing configuration to make it work, but applications are unaware.

This is in contrast to an explicit proxy, where applications are made aware of the proxy and send their traffic to its IP address. That address is configured, for example, via a browser PAC (Proxy Auto-Config) file.

In large deployments, systems like Windows Group Policy and WPAD (PAC file discovery via DHCP or DNS) are used to configure a large number of hosts automatically.

Using a proxy can provide a degree of control over outbound web traffic.

For example, the proxy can monitor and keep audit logs of that traffic, or intervene and block traffic that is likely to pose a threat to the network.

There is also a significant benefit unrelated to security: caching. Repeated downloads of the same content can be served from the proxy instead of the origin.

However, deliberately making the proxy the sole means of internet access introduces a bottleneck and a single point of failure. For that reason some kind of high-availability setup would typically be used, such as a load balancer in front of a group of proxies.

Historically, web proxies have been most useful to system administrators in corporate networks, but even a network hosting something like a SaaS product in a microservices architecture can benefit.

One of the first things a piece of malware does (perhaps after gaining persistence on a host) is contact command-and-control infrastructure over the internet.

A proxy can make you aware of this, block the attempt and give you a chance to identify the affected machine.

I chose Squid for the proxy software. Squid is a caching web proxy and is a very popular and mature project. It has been around for more than 20 years and is easy to install and configure.

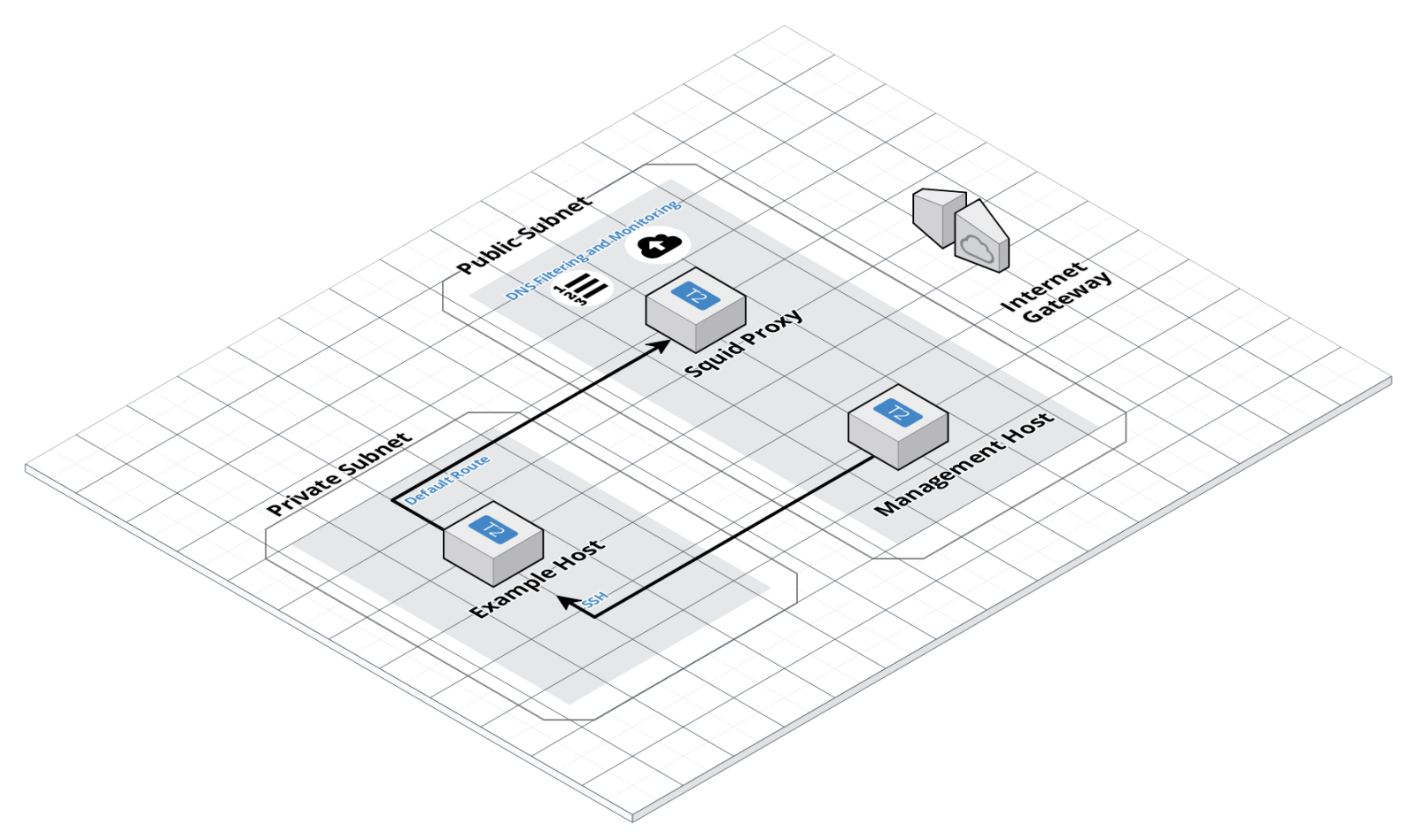

The diagram above shows the key components: a private subnet with an example host that will try to make requests to the internet.

The proxy receives traffic via a network default route, logging requests and filtering based on domain name.

A management host is used just to provide a means to SSH to the example host, which only has a private IP address.

Management networks are a topic for another article. This host simulates access that would normally happen over a VPN, if at all.

Routing

There is a private subnet with a default route to the proxy’s network interface. This means all internet-bound traffic will arrive at the proxy server first.
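A single `0.0.0.0/0` route is enough because of how route tables choose a route. A VPC route table sends each packet along the most specific route that matches its destination (longest-prefix match). Every VPC route table also contains a `local` route for the VPC's own CIDR block. Traffic between hosts inside the VPC therefore stays local, and everything else goes to the proxy.

The shell sketch below models that lookup. It makes these assumptions:

- The VPC CIDR is 10.0.0.0/16. The article only shows addresses in 10.0.1.x and 10.0.2.0/24.
- The function names and the `proxy-eni` target label are illustrative, not AWS API values.

```shell
#!/bin/sh
# Sketch: longest-prefix-match route selection, the rule a VPC route table applies.
# Assumptions: VPC CIDR 10.0.0.0/16; "proxy-eni" is an illustrative target label.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    _a=${1%%.*}; _rest=${1#*.}
    _b=${_rest%%.*}; _rest=${_rest#*.}
    _c=${_rest%%.*}; _d=${_rest#*.}
    echo $(( ($_a << 24) + ($_b << 16) + ($_c << 8) + $_d ))
}

# Succeed if IPv4 address $1 lies inside CIDR block $2 (for example 10.0.2.0/24).
in_cidr() {
    _net=${2%/*}
    _len=${2#*/}
    _mask=$(( (0xFFFFFFFF << (32 - $_len)) & 0xFFFFFFFF ))
    _ip=$(ip_to_int "$1")
    _base=$(ip_to_int "$_net")
    [ $(( $_ip & $_mask )) -eq $(( $_base & $_mask )) ]
}

# Read "CIDR TARGET" routes from stdin and print the target of the most specific
# route containing destination address $1 ("none" if no route matches).
select_route() {
    best_len=-1
    best_target=none
    while read -r cidr target; do
        [ -n "$cidr" ] || continue
        prefix_len=${cidr#*/}
        if in_cidr "$1" "$cidr" && [ "$prefix_len" -gt "$best_len" ]; then
            best_len=$prefix_len
            best_target=$target
        fi
    done
    echo "$best_target"
}

# The private subnet's route table: the automatic local route plus the proxy route.
routes='10.0.0.0/16 local
0.0.0.0/0 proxy-eni'

printf '%s\n' "$routes" | select_route 10.0.1.91       # prints: local
printf '%s\n' "$routes" | select_route 104.154.89.105  # prints: proxy-eni
```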

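```hcl
resource "aws_subnet" "private_subnet" {
  vpc_id     = "${aws_vpc.aws_proxy_pattern_vpc.id}"
  cidr_block = "${var.cidr_private_subnet}"

  tags = {
    Name = "aws_proxy_pattern_private_subnet"
  }
}

resource "aws_route_table" "private_routes" {
  vpc_id = "${aws_vpc.aws_proxy_pattern_vpc.id}"

  tags = {
    Name = "private_routes"
  }
}

# Send all non-local traffic from the private subnet to the proxy's network interface
resource "aws_route" "proxy_route" {
  route_table_id         = "${aws_route_table.private_routes.id}"
  destination_cidr_block = "0.0.0.0/0"
  network_interface_id   = "${var.proxy_network_interface_id}"
}

# Associate the route table with the private subnet; without this the subnet
# keeps using the VPC's main route table
resource "aws_route_table_association" "private_routes" {
  subnet_id      = "${aws_subnet.private_subnet.id}"
  route_table_id = "${aws_route_table.private_routes.id}"
}
```

Disabling source/destination checks on the proxy’s network interface is also necessary. Without this, it cannot receive traffic that is not addressed to its own IP address: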

```hcl
resource "aws_network_interface" "proxy" {
  subnet_id = "${var.subnet_id}"

  # Important to disable this check to allow traffic not addressed to the
  # proxy to be received
  source_dest_check = false
}
```

Finally, some iptables rules on the proxy instance redirect the packets to Squid, which listens on port 3129 for HTTP and port 3130 for HTTPS.

From there, Squid’s onward connections reach the internet with no further configuration. This works because the proxy instance has a public IP address and the public subnet’s default route points at the VPC’s internet gateway. The iptables rules are:

```shell
# Redirect HTTP and HTTPS from the private subnet to Squid's intercept ports
iptables -t nat -I PREROUTING 1 -s 10.0.2.0/24 -p tcp --dport 80 -j REDIRECT --to-ports 3129
iptables -t nat -I PREROUTING 1 -s 10.0.2.0/24 -p tcp --dport 443 -j REDIRECT --to-ports 3130
```

Provisioning the Proxy Instance

There were many ways I could have provisioned the EC2 instance used for the proxy.

In an excellent article on the AWS Security Blog, the proxy was built from source on the running instance.

Package managers such as apt and yum provide a squid package, though you often don’t get the latest version or particular compiled-in features.

Chef and Puppet modules for Squid offer a more ‘declarative’ approach. I specify the machine configuration I need, and the provisioning layer translates it into the ‘imperative’ commands that achieve that state.

Whichever provisioning method is used, a machine imaging tool like Packer could build an AWS AMI ahead of time. This gets an instance serving traffic quickly after boot, which is especially useful in a load-balanced proxy group.

In my case, I opted to use my own Docker image, based on Alpine Linux and using the apk package manager to install Squid. The resulting image is around 15 MB, and together with Terraform it makes this setup repeatable for others.

Pulling a Docker image and running it is much easier, and more repeatable across a variety of environments, than the alternatives.

It also allowed me to try out some interesting solutions that NearForm are working on for vulnerability scanning of docker images, and it was good to see that the low attack surface of Alpine fed through to a clean bill of health on my image (at least for now).

The server still needed some provisioning before it could run the Squid container. For this I used an EC2 user data script, which:

- installs Docker
- creates the Squid configuration file
- sets up the X.509 certificate that Squid needs for SSL inspection
- runs the Squid container
- creates the iptables rules described earlier

```hcl
resource "aws_instance" "proxy" {
  ...
  user_data = "${file("proxy/proxy_user_data.sh")}"
  ...
}
```

EC2 runs this script when the instance first boots.
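The user data script writes out the Squid configuration shown in the next section. If the whitelists need to differ between environments, one option is to generate the `acl` lines from a plain list of domains. This is an assumption, not necessarily what the repository does. The sketch below uses a hypothetical `whitelist_acls` helper:

```shell
#!/bin/sh
# Hypothetical user data helper: print one squid "acl" line per domain so that a
# whitelist can be maintained as a plain list of names.
#   $1 = ACL name, $2 = ACL type, remaining arguments = domains
whitelist_acls() {
    acl_name=$1
    acl_type=$2
    shift 2
    for domain in "$@"; do
        printf 'acl %s %s %s\n' "$acl_name" "$acl_type" "$domain"
    done
}

# Print the HTTPS whitelist used in this article. In a user data script the
# output could be appended to the configuration file with ">>" instead.
whitelist_acls allowed_https_sites ssl::server_name \
    .amazonaws.com .badssl.com .security.ubuntu.com
```

This prints the three `acl allowed_https_sites ssl::server_name ...` lines that appear in the configuration below. Squid also accepts several values on a single `acl` line, so writing one domain per line is a readability choice rather than a requirement.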

Proxy Configuration and SSL Inspection

The squid configuration looks like this:

```
visible_hostname squid

# Handling HTTP requests
http_port 3129 intercept
acl allowed_http_sites dstdomain .amazonaws.com
acl allowed_http_sites dstdomain .security.ubuntu.com
http_access allow allowed_http_sites

# Handling HTTPS requests
https_port 3130 cert=/etc/squid/ssl/squid.pem ssl-bump intercept
acl SSL_port port 443
http_access allow SSL_port
acl allowed_https_sites ssl::server_name .amazonaws.com
acl allowed_https_sites ssl::server_name .badssl.com
acl allowed_https_sites ssl::server_name .security.ubuntu.com
acl step1 at_step SslBump1
acl step2 at_step SslBump2
acl step3 at_step SslBump3
ssl_bump peek step1 all
ssl_bump peek step2 allowed_https_sites
ssl_bump splice step3 allowed_https_sites
ssl_bump terminate step3 all
http_access deny all
```

Most of the configuration is straightforward and sets up ports and domain whitelists. The interesting part is the ssl_bump directives.
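Before getting to ssl_bump, one detail of the whitelists deserves a note: the leading dot on each entry matters. For `dstdomain` and `ssl::server_name` ACLs, an entry such as `.amazonaws.com` matches `amazonaws.com` itself and any subdomain of it. An entry without the dot matches only that exact name.

The shell sketch below models just that rule, which can be handy for checking a whitelist offline. `domain_allowed` is a hypothetical helper, and it ignores Squid features such as IP-address entries and ACL options.

```shell
#!/bin/sh
# Sketch of Squid-style domain matching for dstdomain / ssl::server_name entries.
# Simplified: hostnames only, compared case-insensitively; no IP entries or options.

lower() { printf '%s' "$1" | tr '[:upper:]' '[:lower:]'; }

# Succeed if hostname $1 matches any of the whitelist entries that follow it.
domain_allowed() {
    host=$(lower "$1")
    shift
    for entry in "$@"; do
        entry=$(lower "$entry")
        case $entry in
            .*)
                # ".example.com" matches example.com and every subdomain of it
                [ "$host" = "${entry#.}" ] && return 0
                case $host in *"$entry") return 0 ;; esac
                ;;
            *)
                # "example.com" matches only that exact name
                [ "$host" = "$entry" ] && return 0
                ;;
        esac
    done
    return 1
}

whitelist=".amazonaws.com .badssl.com .security.ubuntu.com"
for name in sha512.badssl.com expired.badssl.com baddomain.com; do
    if domain_allowed "$name" $whitelist; then
        echo "$name: on the whitelist"
    else
        echo "$name: not on the whitelist"
    fi
done
```

Note that `expired.badssl.com` passes the name check. In the tests later in the article, the certificate verification failure recorded in cache.log, not the whitelist, is what stops that connection.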

Intercepting HTTPS traffic is essentially a man-in-the-middle attack. A proxy that decrypts HTTPS traffic has to avoid certificate warnings and client rejections. It usually does this by having clients install a root certificate (owned by the proxy) in advance, and then issuing certificates signed by this root for each HTTPS domain on the fly.

This is a complex topic and something of a security ‘can of worms’, which you can read more about here.

For this article, I’m doing something less controversial: filtering on domain name during the TLS handshake, without decrypting any traffic.

This is where the ssl_bump directives come in:

- Step 1 is the initial TCP connection.

- Step 2 is the TLS ClientHello message, in which the client may specify the domain name it wants to reach using the Server Name Indication (SNI) extension.

- Step 3 is the TLS ServerHello message where the server provides a certificate which will include the domain name of the server.

By peeking at steps 1 and 2 and deciding at step 3, I’m instructing Squid to:

- read the ClientHello and note the SNI domain (if any)
- forward the ClientHello to the server over an onward TCP connection
- extract the domain name from the server’s certificate
- make a decision

Depending on the domain name, it then either splices the rest of the connection (passing it through without decryption) or terminates it.
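Squid evaluates the ssl_bump lines in order at each step and applies the first one whose ACLs all match. The sketch below walks the four rules from the configuration above for a given step. It takes as input whether the server name passed the `allowed_https_sites` check.

`ssl_bump_action` is a hypothetical helper, and the model is deliberately narrow. When none of the four rules applies (for example, a non-whitelisted SNI at step 2), it prints `no-match`. It does not cover Squid's default handling of that case.

```shell
#!/bin/sh
# Sketch: first-match evaluation of the ssl_bump rules from the squid.conf above:
#   ssl_bump peek      step1 all
#   ssl_bump peek      step2 allowed_https_sites
#   ssl_bump splice    step3 allowed_https_sites
#   ssl_bump terminate step3 all
# $1 = SslBump step (1, 2 or 3)
# $2 = "yes" if the server name matched allowed_https_sites, otherwise "no"
ssl_bump_action() {
    step=$1
    allowed=$2
    # Each rule is "action step acl", checked in configuration order.
    for rule in "peek 1 all" "peek 2 allowed" "splice 3 allowed" "terminate 3 all"; do
        set -- $rule
        if [ "$step" = "$2" ] && { [ "$3" = all ] || [ "$allowed" = yes ]; }; then
            echo "$1"
            return 0
        fi
    done
    echo "no-match"   # none of these four rules applies; Squid's defaults are not covered
    return 1
}

# A whitelisted connection: peek, peek, then splice.
for step in 1 2 3; do ssl_bump_action "$step" yes; done
# A name that fails the check at step 3 is terminated.
ssl_bump_action 3 no
```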

Squid’s online documentation does a great job of explaining this peek and splice method.

Testing the Proxy

Bring up the Infrastructure

So that was plenty of theory! Let’s see if it actually works.

I bring up the infrastructure with `terraform init`, `terraform plan` and `terraform apply`, making sure that I have AWS credentials set up in ~/.aws/credentials with a profile called `default`.

Once the infrastructure is created, I should see some IP addresses in the output:

```
Apply complete! Resources: 13 added, 0 changed, 0 destroyed.

Outputs:

host_private_ip = 10.0.2.132
management_host_public_ip = 18.130.203.68
proxy_public_ip = 18.130.227.27
```

Open SSH Connections to Run the Tests

Then I open an SSH connection through the management host to the example host, ensuring that I avoid the common pitfalls:

- The AWS security group for the management host needs a rule allowing inbound SSH from your IP address.
- The username for Ubuntu instances on AWS is `ubuntu`.
- The SSH key pair you used when creating the instances should be loaded into your SSH agent so that it can be forwarded:

```shell
eval `ssh-agent -s`
ssh-add ~/.ssh/yourkey.pem
```

If you have done all that, you should be able to get through to the example host:

```
╭─ubuntu@ip-172-31-1-44 ~
╰─➤ ssh -A ubuntu@18.130.203.68
ubuntu@ip-10-0-1-91:~$ ssh ubuntu@10.0.2.132
ubuntu@ip-10-0-2-132:~$
```

In a separate window, you can SSH to the proxy instance to monitor the logs:

```
╭─ubuntu@ip-172-31-1-44 ~
╰─➤ ssh -A ubuntu@18.130.227.27
ubuntu@ip-10-0-1-19:/home/ubuntu# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
4f0bfa799f10 karlhopkinsonturrell/squid-alpine "squid '-NYCd 1'" 2 minutes ago Up 2 minutes romantic_kilby
ubuntu@ip-10-0-1-19:/home/ubuntu# tail -F -n0 /var/log/squid/access.log
```

Make Some Allowed Requests

```
ubuntu@ip-10-0-2-132:~$ curl http://www.amazonaws.com
<!DOCTYPE HTML PUBLIC "-//IETF//DTD HTML 2.0//EN">
<html><head>
<title>301 Moved Permanently</title>
</head><body>
<h1>Moved Permanently</h1>
<p>The document has moved <a href="http://aws.amazon.com">here</a>.</p>
</body></html>
ubuntu@ip-10-0-2-132:~$ curl https://sha512.badssl.com
<!DOCTYPE html>
<html>
<head>
<meta name="viewport" content="width=device-width, initial-scale=1">
<link rel="shortcut icon" href="/icons/favicon-green.ico"/>
<link rel="apple-touch-icon" href="/icons/icon-green.png"/>
<title>sha512.badssl.com</title>
<link rel="stylesheet" href="/style.css">
<style>body { background: green; }</style>
</head>
<body>
<div id="content">
<h1 style="font-size: 12vw;">
sha512.<br>badssl.com
</h1>
</div>
</body>
</html>
```

The sha512.badssl.com domain is one of the test endpoints of the BadSSL service and serves a valid certificate. The proxy lets through connections over both HTTP and HTTPS to domains on the whitelist.

The proxy should have logged these requests in the other window:

```
1533036187.502 580 10.0.2.132 TCP_REFRESH_MODIFIED/301 549 GET http://www.amazonaws.com/ - ORIGINAL_DST/72.21.210.29 text/html
1533036191.052 568 10.0.2.132 TCP_TUNNEL/200 1041 CONNECT 104.154.89.105:443 - ORIGINAL_DST/104.154.89.105 -
```

Make Some Disallowed Requests

```
ubuntu@ip-10-0-2-132:~$ curl http://baddomain.com
...
<blockquote id="error">
<p><b>Access Denied.</b></p>
</blockquote>
<p>Access control configuration prevents your request from being allowed at this time. Please contact your service provider if you feel this is incorrect.</p>
...
ubuntu@ip-10-0-2-132:~$ curl https://baddomain.com
curl: (35) gnutls_handshake() failed: The TLS connection was non-properly terminated.
ubuntu@ip-10-0-2-132:~$ curl https://expired.badssl.com
curl: (60) server certificate verification failed. CAfile: /etc/ssl/certs/ca-certificates.crt CRLfile: none
```

Requests to a non-whitelisted domain are blocked, as is a connection to a whitelisted domain whose certificate has expired. The corresponding access.log and cache.log entries look like this:

access.log

```
1533036283.207 85 10.0.2.132 TCP_DENIED/403 3842 GET http://baddomain.com/ - HIER_NONE/- text/html
1533036296.674 53 10.0.2.132 TAG_NONE/200 0 CONNECT 34.211.177.64:443 - HIER_NONE/- -
1533036336.350 380 10.0.2.132 TAG_NONE/200 0 CONNECT 104.154.89.105:443 - ORIGINAL_DST/104.154.89.105 -
```

cache.log

```
2018/07/31 11:25:36| Error negotiating SSL on FD 16: error:14007086:SSL routines:CONNECT_CR_CERT:certificate verify failed (1/-1/0)
```

Cleaning Up

Don’t forget to run `terraform destroy` at the end so you don’t rack up costs in your AWS account for EC2 instances you no longer need!

Also check out our post about writing reusable Terraform modules for AWS-based infrastructure.
