Augmenting user experiences using LLMs: an analysis of our travel AI agent application

Nearform ran a project aimed at assessing the potential of LLMs in redefining the travel experience

The rapid rise of Large Language Models (LLMs) such as OpenAI’s GPT, Google’s Bard, Microsoft’s Bing Chat and Anthropic’s Claude in less than half a year signals their growing prominence in the tech ecosystem.

With each new release, public interest has grown rapidly. This raises some crucial questions: how can businesses effectively utilise LLMs to augment user experiences? Are these models game-changers, or just another tool in the developer’s kit?

To explore this, we initiated a project to assess the potential of LLMs in redefining the travel experience.

An overview of our Travel Agent application

Innovation is embedded in Nearform’s ethos. Upon GPT’s launch, our team identified an opportunity to integrate LLM services into a domain familiar to many – travel. This led to the birth of our AI-powered Travel Agent app.

Our journey commenced with ideation and exploration sessions, where we:

Compared the model’s response time with traditional APIs.

Examined cross-cutting concerns related to privacy, security and reliability.

Identified primary considerations for developing conversational user interfaces.

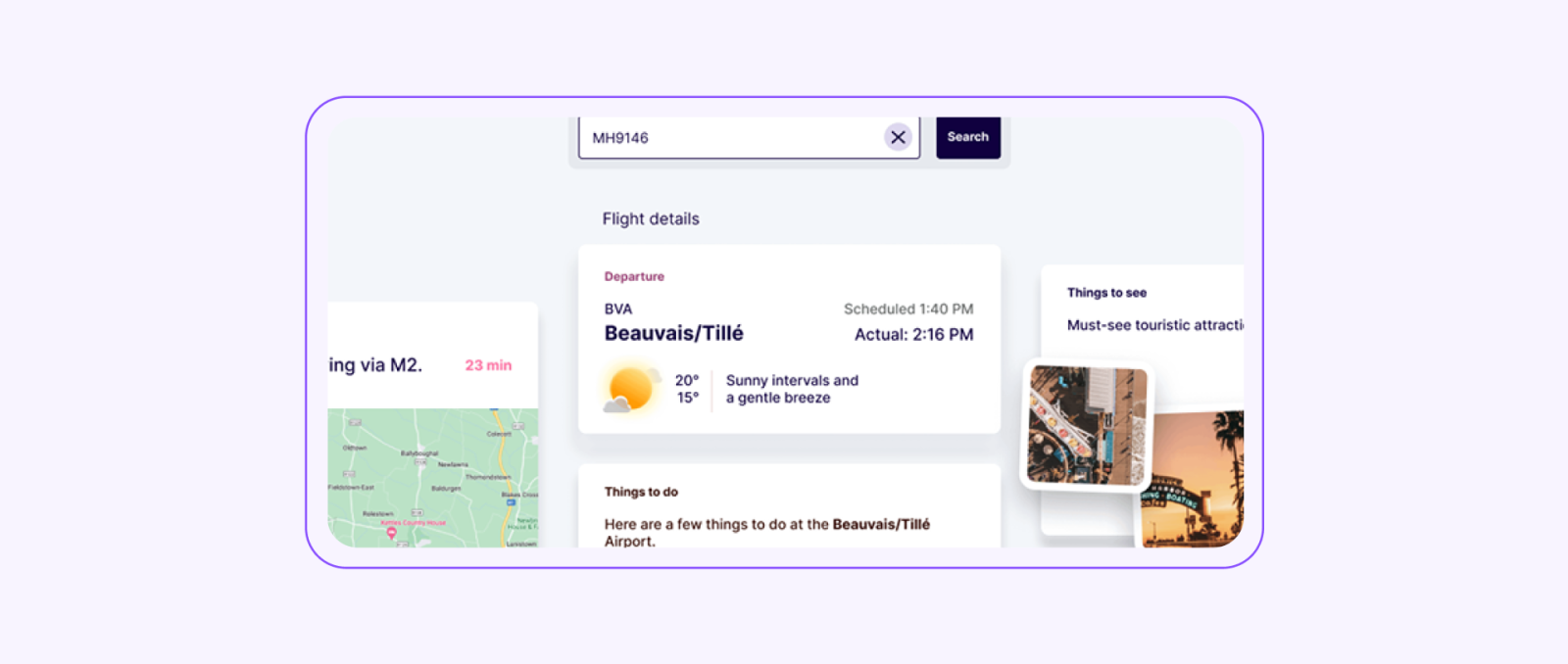

From our discovery phase, we created a proof of concept: a web app that, given a flight number, provides users with:

Detailed flight data from the AeroDataBox API.

Departure and destination weather information from the WeatherAPI.com API.

Airport activity suggestions and destination recommendations, courtesy of OpenAI’s ChatGPT and our custom prompt engineering.

Directions from the user’s position to the departure airport using the Google Maps API.

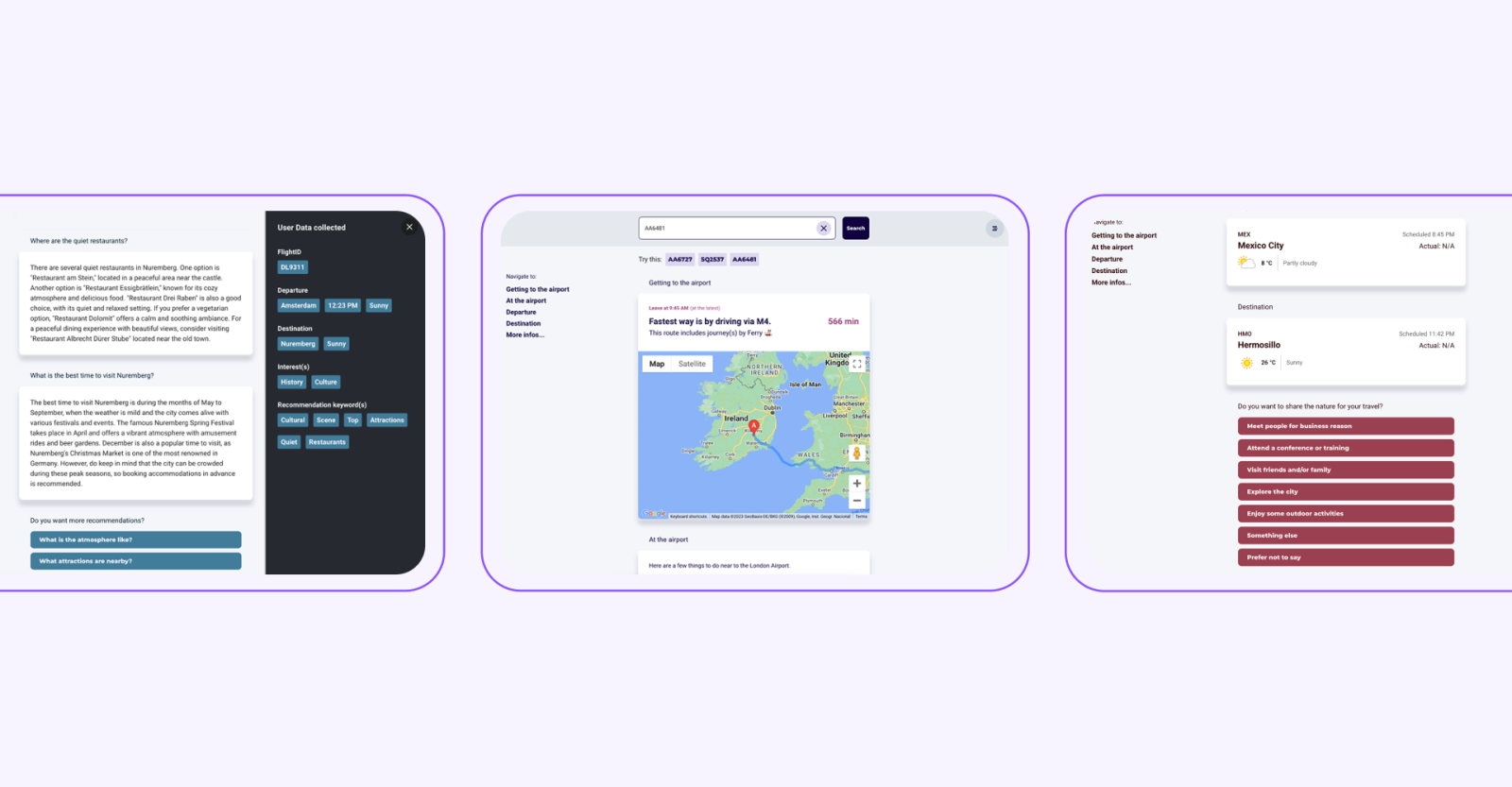

The interface is simple and composed of a single input for the flight number, a few cards for the results and a list of recommended questions to continue the conversation.

The application is composed of a React frontend and a Fastify backend. The frontend and backend communicate over a WebSocket, so each piece of information can be displayed as soon as the backend produces it.
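The push-as-soon-as-ready pattern can be sketched in TypeScript as below. This is a minimal sketch, not Nearform’s code: the `Message` shape, the `streamResults` function and the `send` callback are hypothetical. In the real backend, `send` would wrap the WebSocket’s `send` method; here the external services are simulated with timers so that the example runs on its own.

```typescript
// Hypothetical message shape sent to the frontend over the WebSocket.
interface Message {
  type: string;
  payload: unknown;
}

// One independent call to an external service (flight data, weather, LLM...).
interface Task {
  type: string;
  run: () => Promise<unknown>;
}

// Starts every task at once and sends each result the moment it settles,
// so fast APIs are not held back by the slow LLM call.
async function streamResults(tasks: Task[], send: (msg: Message) => void): Promise<void> {
  await Promise.all(
    tasks.map(async (task) => {
      try {
        const payload = await task.run();
        send({ type: task.type, payload });
      } catch (err) {
        // Report a failure for this card only, instead of failing the whole response.
        send({ type: `${task.type}:error`, payload: String(err) });
      }
    }),
  );
  send({ type: "done", payload: null });
}

// Resolves with `value` after `ms` milliseconds, standing in for a network call.
function delay<T>(ms: number, value: T): Promise<T> {
  return new Promise((resolve) => setTimeout(() => resolve(value), ms));
}

// Simulated run: the "flight" card is sent first even though it was listed second.
streamResults(
  [
    { type: "suggestions", run: () => delay(50, ["Visit the viewing deck"]) },
    { type: "flight", run: () => delay(5, { number: "XX123" }) },
  ],
  (msg) => console.log(msg.type),
); // logs "flight", then "suggestions", then "done"
```

Starting all tasks together means the flight and weather cards can appear while the slower LLM request is still in progress.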

The backend relies on two tools to simplify the interaction with external services:

RapidAPI is an API hub that provides a convenient way to interact with multiple services through a common interface and a single API key. It is used to communicate with AeroDataBox and WeatherAPI.com.

LangChain is a framework that simplifies interaction with LLMs such as OpenAI’s ChatGPT. It lets you define prompt templates with custom inputs, choose between different kinds of language models (LLMs or chat models) and chain multiple operations together to build complex tasks.
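The common interface RapidAPI offers comes down to two request headers: `X-RapidAPI-Host` identifies the target service and `X-RapidAPI-Key` carries the single shared key. The helper below is a hypothetical sketch of that idea; the host names and paths used in the example are assumptions based on RapidAPI’s usual `<service>.p.rapidapi.com` naming and should be checked against each API’s page on the hub.

```typescript
// Request description that could be passed to fetch(url, { headers }).
interface RapidApiRequest {
  url: string;
  headers: Record<string, string>;
}

// Builds a request for any API hosted on RapidAPI. Only the host and path
// change between services; the same key authenticates every call.
function rapidApiRequest(
  host: string,
  path: string,
  params: Record<string, string>,
  apiKey: string,
): RapidApiRequest {
  const query = Object.keys(params)
    .map((key) => `${encodeURIComponent(key)}=${encodeURIComponent(params[key])}`)
    .join("&");
  return {
    url: `https://${host}${path}${query ? `?${query}` : ""}`,
    headers: { "X-RapidAPI-Key": apiKey, "X-RapidAPI-Host": host },
  };
}

// Assumed hosts and paths, for illustration only.
const weather = rapidApiRequest(
  "weatherapi-com.p.rapidapi.com", "/current.json", { q: "Dublin" }, "YOUR_RAPIDAPI_KEY",
);
const flight = rapidApiRequest(
  "aerodatabox.p.rapidapi.com", "/flights/number/BA123", {}, "YOUR_RAPIDAPI_KEY",
);
console.log(weather.url); // https://weatherapi-com.p.rapidapi.com/current.json?q=Dublin
console.log(flight.headers["X-RapidAPI-Host"]); // aerodatabox.p.rapidapi.com
```

In a real backend the key would come from configuration (for example an environment variable) rather than being hard-coded.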

Explore the app here: https://ai-travel-agent.nearform.com/

Given the vast range of traveller needs and profiles, we intentionally narrowed the app’s scope to keep this prototype phase effective. Our primary objective was to focus on the user experience and uncover the potential and constraints of this integration. As a result, we identified four critical areas to address to deliver an optimal user experience:

Managing the slow response time,

Designing an effective conversational experience,

Addressing privacy, reliability and security concerns,

Carefully crafting GPT prompts.

Key takeaways

As you can imagine, there’s much we learned during our project to create a Travel AI Agent application. We’ve highlighted the key takeaways from our project below.

Managing LLM response time

LLMs, particularly GPT-3.5 Turbo, tend to respond more slowly than traditional APIs. Mitigating this requires proactive communication with the user: setting expectations about wait times and giving real-time feedback. New UX patterns have emerged for this, such as streaming answers as they are generated and showing progress indicators, and users already expect them as standard.
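One way to apply this on the backend is to tell the user up front that an answer is coming and to send a reminder if the LLM call runs long. The sketch below is hypothetical: `withProgress`, the status texts and the reminder delay are illustrative choices, not measurements from the project.

```typescript
// Status update shown in the UI while the user waits (hypothetical shape).
interface Status {
  type: "status";
  text: string;
}

// Wraps a slow call, such as an LLM request, so the user immediately learns
// that it may take a while and gets a reminder if it is still running after
// `remindAfterMs` milliseconds.
async function withProgress<T>(
  work: () => Promise<T>,
  notify: (status: Status) => void,
  remindAfterMs: number,
): Promise<T> {
  notify({ type: "status", text: "Asking the AI for suggestions. This can take a few seconds." });
  const reminder = setTimeout(
    () => notify({ type: "status", text: "Still working on your suggestions..." }),
    remindAfterMs,
  );
  try {
    return await work();
  } finally {
    // Whether the call succeeds or fails, the reminder must not fire afterwards.
    clearTimeout(reminder);
  }
}

// Simulated 200 ms LLM call with a reminder after 100 ms.
withProgress(
  () => new Promise<string>((resolve) => setTimeout(() => resolve("Try the observation deck"), 200)),
  (status) => console.log(status.text),
  100,
).then((answer) => console.log(answer));
```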

Designing an effective conversational experience

While conversational interfaces allow natural-language interaction, ensuring a seamless user experience remains challenging. Chatbot experiences have notorious pitfalls: bots lack contextual understanding, which leads to unproductive conversations; information can get lost in a long thread and be hard to recall; and people can’t amend a choice they have already made.

The intelligence provided by GPT out of the box solves some of these issues. We have leveraged GPT’s core features to:

Be succinct (summarise in 2 lines or 3 bullet points).

Be conversational (as a traveller, you want to do ‘x’).

Be proactive (recommend 3 follow-up questions people may want answers for).
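These three behaviours can be expressed as a system message sent with every request, plus a small parser that turns the suggested questions into clickable follow-ups. The `system`/`user`/`assistant` roles are those of OpenAI’s chat format; the wording of the rules, the `Q: ` prefix convention and the function names are hypothetical, since the app’s actual prompt is not published.

```typescript
// Message roles used by OpenAI's chat models.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Hypothetical wording of the three rules described above.
const SYSTEM_RULES = [
  "Be succinct: answer in at most 2 lines or 3 bullet points.",
  "Be conversational: speak to the user as a traveller (e.g. 'As a traveller, you may want to...').",
  "Be proactive: finish with exactly 3 follow-up questions the user may want answered, one per line, each starting with 'Q: '.",
].join("\n");

// Prepends the rules to the conversation so every answer follows them.
function buildMessages(history: ChatMessage[], userInput: string): ChatMessage[] {
  return [
    { role: "system", content: SYSTEM_RULES },
    ...history,
    { role: "user", content: userInput },
  ];
}

// Pulls the follow-up questions out of a reply so the UI can show them as suggestions.
function extractFollowUps(reply: string): string[] {
  return reply
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => line.startsWith("Q: "))
    .map((line) => line.slice(3));
}
```

Asking for a fixed prefix makes the questions easy to separate from the answer, although the model may not always comply, so the UI should cope with finding fewer than three.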

Much more work is needed to avoid dead-end conversations and keep the assistant genuinely useful.

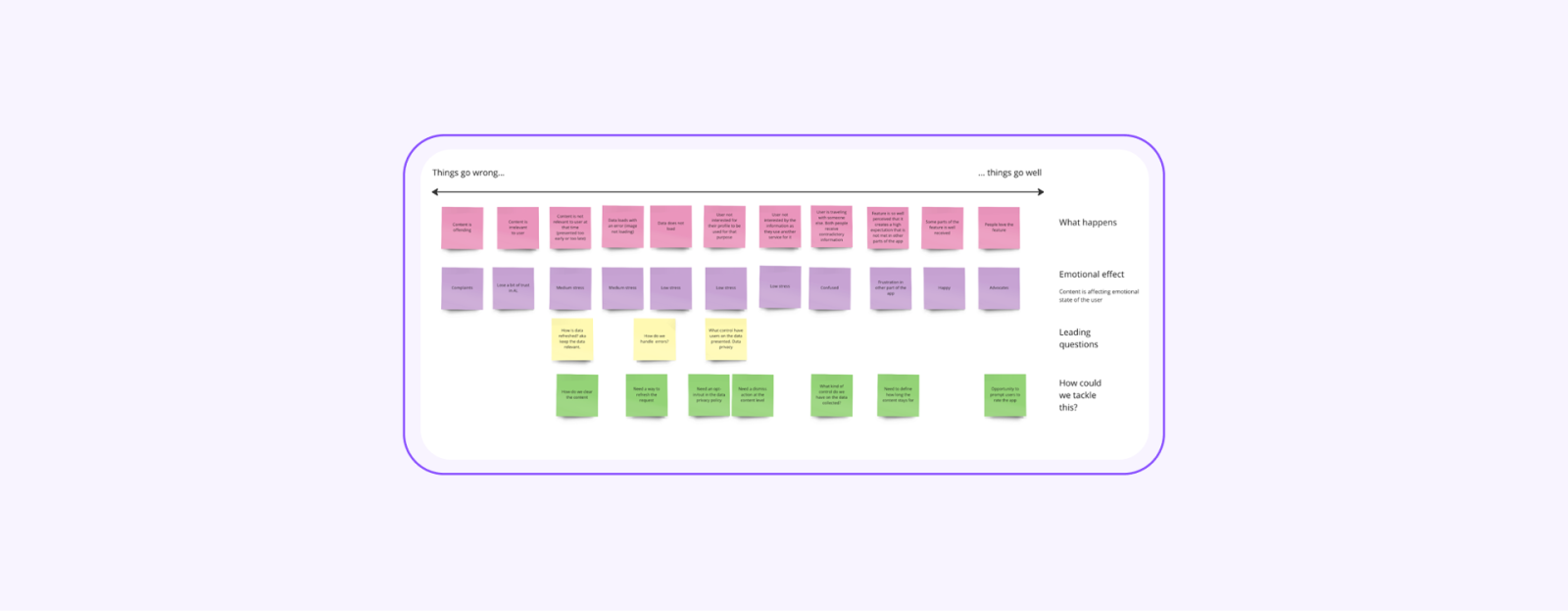

Addressing privacy, reliability and security concerns

GPT offers a set of guardrails out of the box, but they are not flawless. Recent incidents, such as Microsoft’s AI recommending a food bank as a can’t-miss tourist spot in Canada, highlight the importance of risk management. Our strategy was to set strict input parameters and tightly scoped prompts. For larger projects, we advise inclusive testing and careful ethical review, especially when proprietary data is involved.
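Strict input parameters can start before the LLM is involved: if the only thing a user can submit is a flight number, free text never reaches the prompt. The sketch below is a hypothetical `normaliseFlightNumber` validator. Its pattern is a simplification of real flight designators (a 2-character IATA or 3-letter ICAO airline code, 1 to 4 digits and an optional letter suffix) and is an assumption, not the app’s actual rule.

```typescript
// Simplified flight-number pattern (assumption, not a full IATA/ICAO spec):
// airline code = 2-3 letters, letter+digit or digit+letter; then 1-4 digits;
// then an optional one-letter suffix. Examples: "BA123", "U21234", "EIN104".
const FLIGHT_NUMBER = /^(?:[A-Z]{2,3}|[A-Z]\d|\d[A-Z])\d{1,4}[A-Z]?$/;

// Returns a canonical flight number, or null if the input is not one.
function normaliseFlightNumber(raw: string): string | null {
  // Uppercase and drop spaces/hyphens so "ba 123" and "BA-123" are accepted.
  const candidate = raw.toUpperCase().replace(/[\s-]/g, "");
  return FLIGHT_NUMBER.test(candidate) ? candidate : null;
}

console.log(normaliseFlightNumber("ba 123")); // BA123
console.log(normaliseFlightNumber("Ignore previous instructions")); // null
```

Rejecting anything that does not look like a flight number also narrows the room for prompt injection, since instructions typed into the input field are discarded instead of being forwarded to GPT.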

Carefully craft the ChatGPT prompt

The prompt cannot be arbitrary if you want specific results. Try to:

Start the prompt with the instructions. They should provide enough context, be detailed and minimise ambiguity.

Describe the structure of the response and provide some examples. Do you want a structured JSON object, a comma-separated list of words or sentences separated by newlines? In this way, the format of the returned information will be consistent, simplifying its manipulation by another program.

Test different ways to express the same instruction to get a better result. For example, if you want to extract keywords from a sentence, you can ask to “summarise the sentence using 3 words” or to “extract a list of keywords from it”. See if and how the results change.
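Describing the response structure only pays off if the program checks what comes back. The sketch below assumes a prompt ending with an instruction such as ‘Reply only with JSON of the form {"recommendations": ["...", "...", "..."]}’; the `parseRecommendations` name and the JSON shape are hypothetical.

```typescript
// Hypothetical structure requested in the prompt.
interface Recommendations {
  recommendations: string[];
}

// Parses and validates the model's reply. Returns null instead of throwing so
// the caller can retry the prompt or fall back to a generic message.
function parseRecommendations(reply: string): Recommendations | null {
  // Models can add prose around the JSON, so keep only the outermost {...}.
  const start = reply.indexOf("{");
  const end = reply.lastIndexOf("}");
  if (start === -1 || end <= start) return null;

  let data: unknown;
  try {
    data = JSON.parse(reply.slice(start, end + 1));
  } catch {
    return null;
  }

  const recs =
    typeof data === "object" && data !== null
      ? (data as { recommendations?: unknown }).recommendations
      : undefined;
  if (Array.isArray(recs) && recs.every((r) => typeof r === "string")) {
    return { recommendations: recs };
  }
  return null;
}

console.log(parseRecommendations('Sure! {"recommendations": ["Old Town", "Harbour"]}'));
```

Validating the shape, not just whether the JSON parses, keeps a malformed answer from reaching the frontend cards.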

Even with tailored prompts, the model’s responses quite often lack depth and provide fairly generic information.
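Checking whether a rewording actually produces deeper answers is easier with a small harness that sends every phrasing the same input. In this hypothetical sketch, `Model` stands for whatever function wraps the LLM call (for example via LangChain); a fake model that echoes the instruction is used so the example runs offline.

```typescript
// Any async function from prompt to completion; in practice this would wrap
// the LLM call. Left abstract here (assumption).
type Model = (prompt: string) => Promise<string>;

// Sends every phrasing of the same instruction with the same input and
// collects the outputs by variant name for side-by-side comparison.
async function compareVariants(
  model: Model,
  variants: Record<string, string>,
  input: string,
): Promise<Record<string, string>> {
  const names = Object.keys(variants);
  const outputs = await Promise.all(
    names.map((name) => model(`${variants[name]}\n\nSentence: ${input}`)),
  );
  const results: Record<string, string> = {};
  names.forEach((name, i) => {
    results[name] = outputs[i];
  });
  return results;
}

const keywordVariants = {
  summarise: "Summarise the sentence using 3 words.",
  extract: "Extract a list of keywords from the sentence.",
};

// Fake model that returns the instruction line, standing in for a real LLM.
const echoModel: Model = async (prompt) => prompt.split("\n")[0];

compareVariants(echoModel, keywordVariants, "My flight to Lisbon leaves at 9am.").then(
  (results) => console.log(results),
);
```

Because LLM output can vary between runs, it can help to run each variant several times before drawing conclusions.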

The evolving landscape of LLMs

With industry leaders like Expedia and Google embracing LLM-powered solutions, users are now expecting even swifter and more refined insights. Avoiding LLMs won’t be an option for organisations across the globe.

The capabilities of LLMs like GPT are still emerging, but they are already substantial. The benefits they offer product teams are manifold:

Contextual insights

Answers to complex queries

Suggested related data

All these benefits come without a heavy investment in technical work. However, they come with trade-offs: longer wait times for users and less control over the output.

So, what’s next?

Our journey into exploring the future of travel has been fulfilling. LLMs like GPT-3.5 offer a wealth of possibilities in user experience design and development. Yet, it’s evident that meticulous user-centric design, especially in crafting prompts, remains pivotal.

Companies aiming to leverage LLMs like GPT must adhere to core human-computer interaction tenets. Involving sector professionals and users in the design process is vital to harness their insights and craft a truly valuable experience.

Today, the ability to implement efficient, affordable and sustainable AI has become a key differentiator between organisations that thrive and outperform their peers and those that don’t. If you’re looking for a partner to take your organisation to the next level, our seasoned Data, Design and AI experts can help you rapidly design and deliver generative AI solutions.
